“Let Truth and falsehood grapple,” argued John Milton in Areopagitica, a pamphlet published in 1644 defending the freedom of the press. Such freedom would, he admitted, allow incorrect or misleading works to be published, but bad ideas would spread anyway, even without printing—so better to allow everything to be published and let rival views compete on the battlefield of ideas. Good information, Milton confidently believed, would drive out bad: the “dust and cinders” of falsehood “may yet serve to polish and brighten the armory of truth”.

Yuval Noah Harari, an Israeli historian, lambasts this position as the “naive view” of information in a timely new book. It is mistaken, he argues, to suggest that more information is always better and more likely to lead to the truth; the internet did not end totalitarianism, and racism cannot be fact-checked away. But he also argues against a “populist view” that objective truth does not exist and that information should be wielded as a weapon. (It is ironic, he notes, that the notion of truth as illusory, which has been embraced by right-wing politicians, originated with left-wing thinkers such as Marx and Foucault.)

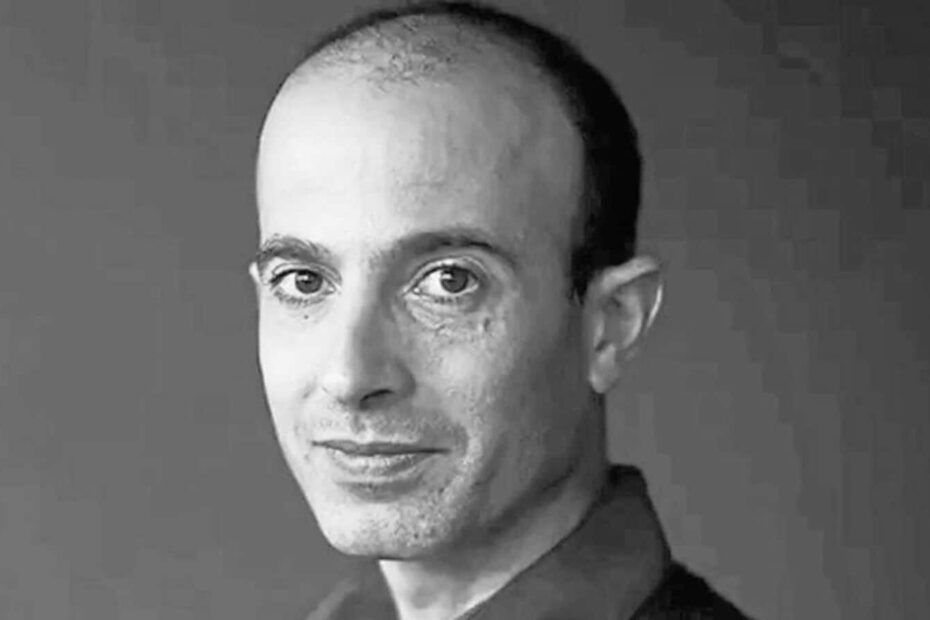

Few historians have achieved the global fame of Mr Harari, who has sold more than 45m copies of his megahistories, including “Sapiens”. He counts Barack Obama and Mark Zuckerberg among his fans. A techno-futurist who contemplates doomsday scenarios, Mr Harari has warned about technology’s ill effects in his books and speeches, yet he captivates Silicon Valley bosses, whose innovations he critiques.

In “Nexus”, a sweeping narrative ranging from the stone age to the era of artificial intelligence (AI), Mr Harari sets out to provide “a better understanding of what information is, how it helps to build human networks, and how it relates to truth and power”. Lessons from history can, he suggests, provide guidance in dealing with big information-related challenges in the present, chief among them the political impact of AI and the risks to democracy posed by disinformation. In an impressive feat of temporal sharpshooting, a historian whose arguments operate on the scale of millennia has managed to capture the zeitgeist perfectly. With 70 nations, accounting for around half the world’s population, heading to the polls this year, questions of truth and disinformation are top of mind for voters—and readers.

Mr Harari’s starting point is a novel definition of information itself. Most information, he says, does not represent anything, and has no essential link to truth. Information’s defining feature is not representation but connection; it is not a way of capturing reality but a way of linking and organising ideas and, crucially, people. (It is a “social nexus”.) Early information technologies, such as stories, clay tablets or religious texts, and later newspapers and radio, are ways of orchestrating social order.

Here Mr Harari is building on an argument from his previous books, such as “Sapiens” and “Homo Deus”: that humans prevailed over other species because of their ability to co-operate flexibly in large numbers, and that shared stories and myths allowed such interactions to be scaled up, beyond direct person-to-person contact. Laws, gods, currencies and nationalities are all intangible things that are conjured into existence through shared narratives. These stories do not have to be entirely accurate; fiction has the advantage that it can be simplified and can ignore inconvenient or painful truths.

The opposite of myth, which is engaging but may not be accurate, is the list, which boringly tries to capture reality, and gives rise to bureaucracy. Societies need both mythology and bureaucracy to maintain order. He considers the creation and interpretation of holy texts and the emergence of the scientific method as contrasting approaches to the questions of trust and fallibility, and to maintaining order versus finding truth.

He also applies this framing to politics, treating democracy and totalitarianism as “contrasting types of information networks”. Starting in the 19th century, mass media made democracy possible at a national level, but also “opened the door for large-scale totalitarian regimes”. In a democracy, information flows are decentralised and rulers are assumed to be fallible; under totalitarianism, the opposite is true. And now digital media, in various forms, are having political effects of their own. New information technologies are catalysts for major historical shifts.

Dark matter

As in his previous works, Mr Harari’s writing is confident, wide-ranging and spiced with humour. He draws upon history, religion, epidemiology, mythology, literature, evolutionary biology and his own family biography, often leaping across millennia and back again within a few paragraphs. Some readers will find this invigorating; others may experience whiplash.

And many may wonder why, for a book about information that promises new perspectives on AI, he spends so much time on religious history, and in particular the history of the Bible. The reason is that holy books and AI are both attempts, he argues, to create an “infallible superhuman authority”. Just as decisions made in the fourth century AD about which books to include in the Bible turned out to have far-reaching consequences centuries later, so, he worries, the decisions made about AI now will shape humanity’s future.

Mr Harari argues that AI should really stand for “alien intelligence” and worries that AIs are potentially “new kinds of gods”. Unlike stories, lists or newspapers, AIs can be active agents in information networks, like people. Existing computer-related perils such as algorithmic bias, online radicalisation, cyber-attacks and ubiquitous surveillance will all be made worse by AI, he fears. He imagines AIs creating dangerous new myths, cults, political movements and new financial products that crash the economy.

Some of his nightmare scenarios seem implausible. He imagines an autocrat becoming beholden to his AI surveillance system, and another who, distrusting his defence minister, hands control of his nuclear arsenal to an AI instead. And some of his concerns seem quixotic: he rails against TripAdvisor, a website where tourists rate restaurants and hotels, as a terrifying “peer-to-peer surveillance system”. He has a habit of conflating all forms of computing with AI. And his definition of “information network” is so flexible that it encompasses everything from large language models like ChatGPT to witch-hunting groups in early modern Europe.

But Mr Harari’s narrative is engaging, and his framing is strikingly original. He is, by his own admission, an outsider when it comes to writing about computing and AI, which grants him a refreshingly different perspective. Tech enthusiasts will find themselves reading about unexpected aspects of history, while history buffs will gain an understanding of the AI debate. Using storytelling to connect groups of people? That sounds familiar. Mr Harari’s book is an embodiment of the very theory it expounds.

© 2024, The Economist Newspaper Limited. All rights reserved. From The Economist, published under licence. The original content can be found on www.economist.com